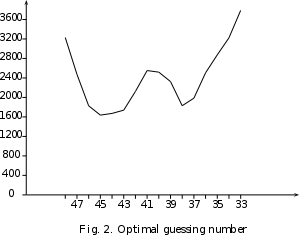

Timings from the article "Attacking Bivium Using SAT Solvers". The authors didn't seem to have randomised the problems enough: the time to solve should increase exponentially, but instead it goes up and down like a roller coaster

Vegard Nossum asked me about the varying time it took for CryptoMiniSat to solve a certain instance that was satisfiable. This inspired me to write an overly long reply, which I think might interest others. So, why does the solving time vary for a specific instance if we permutate the clauses? Intuitively, just by permutating the clauses, the problem itself doesn’t get any easier or harder, so why should that make any difference at all? I will now try to go through the reasons, though they can almost all be summed up as follows: the SAT solver has absolutely no clue what it is solving, and so to solve a problem, it relies on heuristics and randomisation, both of which are influenced by its starting seed, which in turn is influenced by the order of the clauses in the CNF file. And now on to the long explanation.

Let’s divide problems into two categories: satisfiable and unsatisfiable instances. First, let me talk about satisfiable instances. Let’s suppose that we have been solving the problem for some time, and we have connected the dots (variables) well enough through learnt clauses. All that the SAT solver is waiting for is a good guess to set 3-4 variables right, and then it will propagate all variables to the right setting to satisfy all clauses. The problem is therefore to get to the point of only needing to guess 3-4 variables, i.e. to connect the dots, which we do through resolution. We can sacrifice some or all of dot-connecting (i.e. resolution) with some more guessing, and this can in fact be coded down by simply foregoing some of the conflict analysis we do. In the extreme case, all conflict analysis can be disabled, and then the solver would not restart its search. The solver would in essence be brute-forcing the instance through BCP (boolean constraint propagation). It is easy to see why this latter is prone to variation: depending on the ordering of the variables in the search tree, the tree could be orders of magnitude smaller or larger, and the first solution can be at any point in the search tree, essentially at a random place.

If we decide to carry out resolution for satisfiable problems to counter the problem of the variation of the search-tree, it is interesting to realise the following: in most cases, we can not forgo guessing. The reason is simple yet leads to quite interesting properties. Essentially, a SAT instance can have multiple solutions. If there are two solutions, e.g. 01100... and 10011... i.e. the solutions are the complete inverse of one another, then the SAT solver will not be able to prove any variable to any value. The best it could do is to create binary clauses, in the example case for instance

var1=0 -> var2=1, var3=1, var4=0...

and

var1=1 -> var2=0, var3=0, var4=1...

If we do enough resolutions, the “guessing” part will eventually become a solution-selector, i.e. it will select a solution from the set of available solutions. If there are 2^20, evenly distributed in the search space, we might need to set 20 variables before a solution is found through a simple application of BCP. Naturally, SAT solvers don’t normally do this, as they are content in finding one satisfying assignment, reporting that to the user, and exiting. It would be interesting to know the average remaining number of variables that needed to be guessed to solve the solution at the point the SAT solver actually found the solution, but this has not been done yet as far as I know. Then, we would know the trade-off the SAT solver employs between resolution and searching when solving satisfiable instances.

All right, so much for satisfiable instances. What happens with UNSAT instances? Well, the solver must either go through the whole tree and realise there is no solution, or do resolution until it reaches an empty clause, essentially building a resolution tree with an empty clause at its root. Since both of these can be done at the same time, there is a similar trade-off as above, but this time it’s somewhat upside-down. First of all, the search tree can be smaller or larger depending on the ordering of the variables (as before), and secondly, the resolution tree can be smaller or larger depending on the order of resolutions. The minimal resolution tree is (I believe) NP-hard to find, which doesn’t help, but there is at least a minimum resolution tree that limits us, and there is a minimum search tree which we must go through completely, that limits us. So, in contrast to finding satisfying solutions, both of these are complete in some sense, which should make searching for them robust in terms of speed. Finding a satisfying solution is not complete, because, as I noted above, SAT solvers don’t find all satisfying solutions — if they did, they would actually have the same properties as solving an unsatisfiable problem, as they would have to prove that there are no more solutions remaining, essentially proving unsatisfiability.

The above reasoning is probably the reason why SAT solvers tend to behave more robustly when they encounter an unsatisfiable problem. In particular, CryptoMiniSat’s running time seems more robust when solving unsatisfiable problems. Also, for problems that contain a lot of randomly placed solutions in the search space, such as the (in)famous vmpc_33 and vmpc_34 problems, the solving times seem wholly unpredictable for at least two interesting SAT solvers: lingeling and CryptoMiniSat.