CryptoMinisat 3.1 has been released. The short changelog is:

$ git diff cryptoms-3.0 cryptoms-3.1 --shortstat 84 files changed, 3079 insertions(+), 2751 deletions(-)

The changes made were threefold. First, memory usage has been greatly reduced. This is crucial, because memory usage was over 7GB on certain instances. Secondly, the implication cache wasn’t very well-used and an idea that came to my mind greatly improved performance on most problems. Finally, time limiting of some inprocessing techniques on certain types of problems has been improved.

Memory usage reduction

On instances that produced a lot of long learnt clauses the memory usage was very high. These learnt clauses were all automatically linked in to the occurrence list and consequently took large amounts of memory, sometimes up to 10GB. On other instances, the original clauses were too numerous and too large, so putting even them into the occurrence list was too much. On these instances, variable elimination is not carried out (or carried out only later, when enough original clauses have been removed/shortened). To debug some of these problems, I wrote a fuzzer that generates extremely large problems with many binary and many long clauses, it’s available here as “largefuzzer”. It’s actually quite nice with many-many binary clauses so it also can fuzz the problems encountered with probing of extremely weird and large instances.

Implied literal usage improvement

CryptoMiniSat uses implied literals, i.e. caches what literals were propagated by each literal during probing. It then re-uses this information to subsume and/or strengthen clauses. This is kind of similar to stamping though uses more memory. It is actually useful to have alongside stamping, and I now do both — propagating DFS that stamping requires is expensive though updating cache during DFS is just as easy as during quasi-BFS.

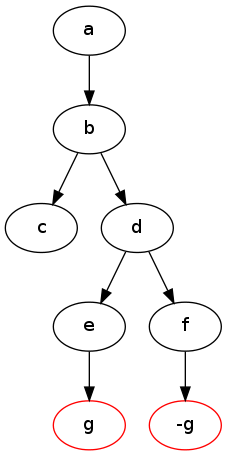

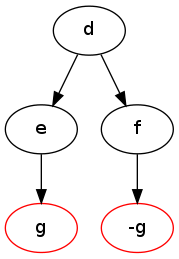

The trick I discovered while playing around with cached implied literals is that if literal L1 propagates L2 and also !L2 then that means there are conceptually two binary clauses in the solver (!L1, L2), (!L1, !L2), so !L1 is TRUE. This is of course trivial, but I never checked for this. The question most would raise is: why would L1 propagate both L2 and !L2 and not fail? The answer is kind of tricky, but very interesting. Let’s say at one point, L1 propagates L2 due to a learnt clause, but that learnt clause is then removed. A new learnt clause is then later learnt, and with that learnt clause in place, L1 propagates !L2. Now, without caching, this would be ignored. Caching memorizes past conceptual binary clauses and re-uses this information.

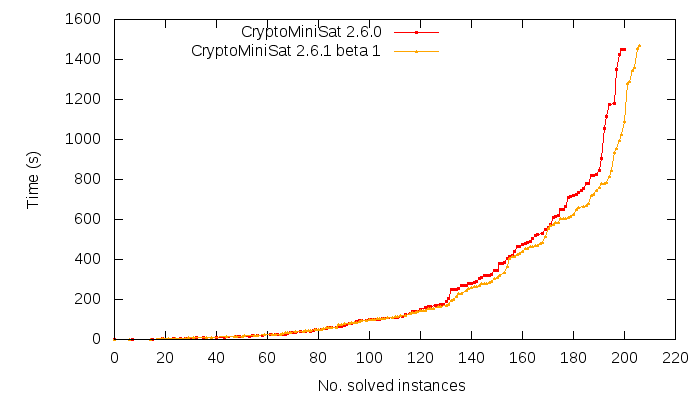

This is not an optimization that only looks good on paper, it is very good to have. With this one optimization, I gained 5 instances from the SAT Comp’09 instances with a 1000s timeout (196 solved -> 201 solved). I can’t right now imagine how this could be done with stamping effectively, but that doesn’t mean it’s not possible. Though, according to my experience, stamping doesn’t preserve that much information over time as it’s being updated (renumbered) frequently while the cache is only improved over time, never shrunk. A possibility would be to have more than one stamp system and round-robin selecting them. However that would mean that sorting of clauses (for shrinking) would need to be done more than once, and sorting them is already relatively expensive. I sometimes feel that what stamping gains in memory it looses on sorting (i.e. processing time) and lower coverage (re-numbering).

More precise time-limiting

Martin Maurer has been kind enough to file a lot of bug reports about probing and variable elimination taking too much time, sometimes upwards of 150s when they should take around 20-30s maximum. While investigating, it tuned out that the problem was very weird indeed. While trying to eliminate or probe one variable the time for that one variable took upwards of 100s. This was completely unexpected as the code only checked for timeouts on a per-variable basis. In the end, the code had to be improved to track time on an intra-variable basis in both systems. While at it, I also added intra-variable time-tracking to implicit clause subsumption and strengthening too. So, over-times should less prevalent from now on. As an interesting side-note, time-limiting on probing is now so fine-grained that a 32-bit unsigned integer would overflow within 15s if used as the time-tracker.