I have worked a lot on CryptoMiniSat 5.0 over the past few months, so I thought I'd write a little about what I spent my time on.

Amazon AWS

I have put a lot of effort into using the Amazon AWS service to run CMS. This is necessary in order to compete in the SAT competition, where my competitors have access to massive resources, some of them to clusters with over 20k CPU cores. Competing against that with a 4-core machine like I did last year will simply not cut it.

The system I built has a client-server architecture: the server is a very small machine (t1.micro) that hands out jobs to one or more very beefy client machines (c4.8xlarge, with 18 physical cores). I need this architecture because the clients are so-called spot instances, which Amazon can shut down at any time. The server keeps track of what has been solved and what still needs to be solved to complete the job. When the job is finished, both the server and the clients shut down. I simply need to issue, e.g., "./launch_server.py --git 82c4e5adce --s3folder newrun --cnfdir satcomp091113 -t 5000" and it will launch the full set of SAT Competition 09+11+13 instances with a 5000s timeout, using a specific Git revision of CryptoMiniSat. When it finishes (in about 4-5 hours), it (should) send me an email with the command line to use to download all the data from Amazon S3. It's neat, fast, and literally just one command line to use.
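The post doesn't show the server's code, so the following is only a minimal Python sketch of the bookkeeping such a server could do. It assumes, hypothetically, that each CNF file is one job and that a job held by a client for longer than some re-queue timeout is presumed lost to a spot-instance shutdown and is handed out again. The class name `JobServer`, its methods, and the timeout rule are illustrative and not taken from CryptoMiniSat's actual scripts.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class JobServer:
    """Hypothetical sketch: tracks which CNF files are still to be solved,
    which are currently handed out, and which are done."""
    timeout: float  # seconds a client may hold a job before it is re-queued
    todo: deque = field(default_factory=deque)
    running: dict = field(default_factory=dict)  # file -> (client, start time)
    done: dict = field(default_factory=dict)     # file -> reported result

    def add_files(self, files):
        self.todo.extend(files)

    def requeue_stale(self, now):
        # A spot instance may vanish without reporting back, so any job
        # held longer than the timeout is put back on the queue.
        stale = [f for f, (_, start) in self.running.items()
                 if now - start > self.timeout]
        for f in stale:
            del self.running[f]
            self.todo.append(f)

    def get_job(self, client, now):
        """Hand the next unsolved file to `client`, or None if nothing is left."""
        self.requeue_stale(now)
        if not self.todo:
            return None
        f = self.todo.popleft()
        self.running[f] = (client, now)
        return f

    def report(self, client, f, result):
        # Only accept the result from the client currently holding the job;
        # late reports from a client whose job was re-queued are ignored.
        holder = self.running.get(f)
        if holder is not None and holder[0] == client:
            del self.running[f]
            self.done[f] = result

    def finished(self):
        """True once every file has been solved and reported."""
        return not self.todo and not self.running
```

In this sketch the re-queue timeout has to be comfortably larger than the per-instance solver timeout (such as the 5000s passed via `-t`); otherwise slow but healthy clients would have their jobs handed out a second time.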

As for how much I have used it, I have spent over $100 on running costs on AWS in the past 2 months. A run like the one above costs about $2. Not super-cheap, but not the end of the world, either.

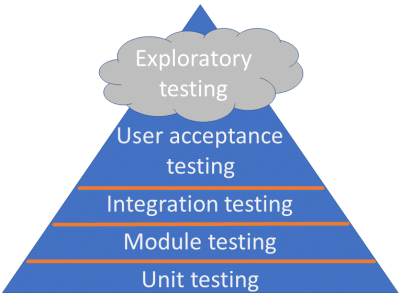

Testing and continuous integration

I have TravisCI, Coverity, and Coveralls integration. These provide continuous integration testing, static analysis, and code coverage analysis, respectively. I find TravisCI immensely valuable; I would have trouble doing without it on a new project. Coverity is also pretty useful: it has actually found some pretty stupid mistakes I have made. Finally, Coveralls has a terrible interface, but I like the idea of test code coverage analysis, and it encourages me to put more effort into testing. For example, it highlights pretty well the areas that I typically break without realizing it when coding: TravisCI usually warns me when something is broken, except where there is no (or too little) test coverage. I am also looking into Docker, which would allow for continuous delivery.

Checking against SWDiA5BY

I have integrated the main idea of the SWDiA5BY A26 code into CryptoMiniSat. Further, I am in the process of integrating one of the patches available on the author's website. I find these patches really interesting, and using SWDiA5BY A26 as a check against my own system has allowed me to get rid of a lot of bugs. So, I am greatly indebted to the authors of MiniSat, Glucose, and SWDiA5BY.
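The post does not describe how the check against SWDiA5BY is carried out. One common way to use a second solver as a reference is differential testing: run both solvers on the same CNF, flag any SAT/UNSAT disagreement, and verify every returned model against the clauses. The sketch below assumes both solvers print SAT-competition-style output (an `s SATISFIABLE` or `s UNSATISFIABLE` line plus `v` lines holding the model). The function names are hypothetical, and actually launching the solvers is left out.

```python
def parse_dimacs(text):
    """Return the clauses of a DIMACS CNF file as lists of ints."""
    clauses, current = [], []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, the 'p cnf' header and SATLIB's '%' trailer.
        if not line or line[0] in "cp%":
            continue
        for lit in map(int, line.split()):
            if lit == 0:  # 0 terminates a clause
                clauses.append(current)
                current = []
            else:
                current.append(lit)
    return clauses


def parse_solver_output(text):
    """Return (status, model) from 's' and 'v' lines of a solver's output."""
    status, model = None, set()
    for line in text.splitlines():
        if line.startswith("s "):
            status = line[2:].strip()
        elif line.startswith("v "):
            model.update(int(x) for x in line[2:].split() if x != "0")
    return status, model


def check_model(clauses, model):
    """True if every clause contains at least one literal set true in the model."""
    return all(any(lit in model for lit in clause) for clause in clauses)


def cross_check(cnf_text, out_a, out_b):
    """Compare two solvers' outputs on the same CNF; return a list of problems."""
    clauses = parse_dimacs(cnf_text)
    results = [parse_solver_output(out) for out in (out_a, out_b)]
    problems = []
    if {status for status, _ in results} == {"SATISFIABLE", "UNSATISFIABLE"}:
        problems.append("solvers disagree on satisfiability")
    for name, (status, model) in zip("AB", results):
        if status == "SATISFIABLE" and not check_model(clauses, model):
            problems.append(f"solver {name} returned a model that violates a clause")
    return problems
```

A disagreement alone does not say which solver is wrong, but a model that violates a clause pins the bug on the solver that produced it, which is why the model check is worth doing even when both solvers agree.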

Conclusions

In the past months I have put a lot of effort into cleaning up, fixing, and generally taking control of CryptoMiniSat. Over the years, more than 240 issues have been filed against CryptoMiniSat on GitHub, and only 7 are currently open. This is a testament to how open and dynamic the solver's development is. If you are interested in helping with development, have new ideas, or have a commercial interest in the solver, don't hesitate to contact me.