Finally, after many months of waiting for our paper to be accepted (preliminary PDF here), Ganak2 is finally released (GitHub code, released binaries) and easily accessible and modifiable. I wish this release had come earlier, but double-blind requirements didn’t allow us to release the code any sooner.

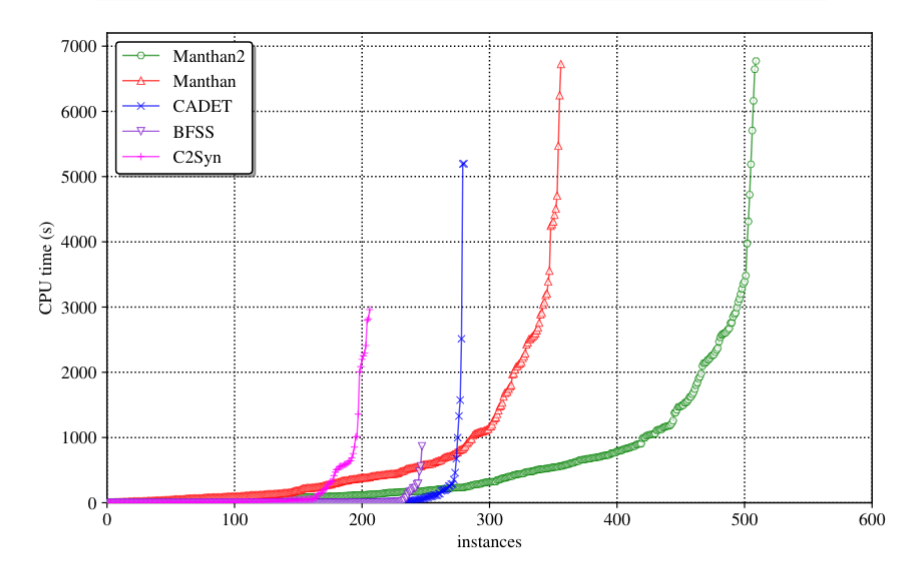

Basically, Ganakv2 has been re-written in many ways. Some of the original ideas by Thurley for SharpSAT has been kept alive, but many-many things are completely different and completely re-written — well over 10’000 lines of change in total. This set of changes has allowed Ganak2 to win every available track at the Model Counting Competition of 2024. In other words, Ganak2 is incredibly fast, making it a state-of-the-art tool.

Chonological Backtracking, Enhanced SAT solving, S- and D-sets

The new Ganak has a pretty powerful SAT solver engine with its own restart strategy, polarity and variable branching strategy. This SAT solver is tightly integrated into Ganak, and reuses the same datastructures a Ganak, hence the counter/SAT transitions are smooth. This relies on the observation that once every variable in the (minimal) independent set has been assigned, there is at most one solution, so we can just run a SAT solver, there is no need to a full-blown model counter. This idea has existed at least since GPMC, but we improved on it in important ways.

A very complicated but very important aspect of Ganak2 is our integration of Chronological Backtracking which we adopted to the model counting setting. This required a lot of attention to detail, and in order to debug this, we took full advantage of the fuzzer written and maintained by Anna Latour. We further added very serious and thorough checking into Ganak2, with varying levels of internal checking and per-node checking of counts. This allows to perform effective debugging: the first node that the count is incorrect will immediately stop and error-out, along with a full human-readable debug log of what had happened. This was necessary to do, as some of the issues that Chonological Backtracking leads to are highly non-trivial, as mentioned in the paper.

Ganak2 now understands something we call a D-set, which is a set of variables that’s potentially much larger than the projection set but when branched on, the count is the same. This allows Ganak to branch on far more variables than any other current model counter. We run an algorithm that takes advantage of Padoa’s theorem to make this D-set as large as possible. Further, Ganak2 takes advantage of projection set minimization, calling he minimal set an S-set, and running a SAT solver as soon as all variables from the S-set have been decided. This allows us to run a SAT solver much earlier than any other model counter.

Component Discovery, Subsumption and Strengthening, and Counting over any Field

Since the paper, I have also added a number of pretty interesting improvements, especially related to component generation, which is now a highly cache-optimized datastructure, using time stamping, a trick I learned from Heule et al’s brilliant tree-based lookahead paper (worth a read). I also changed the hash function used to chibihash, it’s not only faster (on the small inputs we run it on) but also doesn’t rely on any specific instruction set, so it can be compiled to emscripten, and so Ganak2 can run in your browser.

There is also a pretty experimental on-the-fly subsumption and strengthening that I do. It actually rewrites the whole search tree in order to achieve this, which is a lot harder to do than I imagined. It’s the most trivial subsumption & strengthening code you can imagine, but then the rewriting is hell on earth. However, the rewriting is necessary in case we want to be able to continue counting without restarting the whole counting process.

Ganak, unlike many of its counterparts, also manages memory very tightly and tries not to die due to memory-out. The target memory usage of the cache can be given via –maxcache in MB. A cache size of 2500MB is default, which should cap out at about 4GB total memory usage. More cache should lead to better performance, but we actually won (every available track of) the Model Counting Competition 2024 with rather low memory usage, I think I restricted it to 8-12GB — other competitors ran with ~2x that.

Furthermore, Arjun, our CNF minimizer has also been tuned. While this is not specifically Ganak, it gives a nice improvement, and has some cool ideas that I am pretty happy about. In particular, it runs the whole preprocessing twice, and it incorporates Armin Biere’s CadiBack for backbone detection. I wish I could improve this system a bit, because sometimes CadiBack takes >1000s to run, but without it, it’s even slower to count in most cases. But not always, and sometimes backbone is just a waste of time.

The system also now allows to use any field, all you need to do is implement the +/-/*/div operators and the 0 and 1 constants. I have implemented integers, rationals, modulo prime counting, and counting over a the field of polynomials with rational coefficients. All you need to do is override the Field and FieldGen interfaces. I made it work for modulo prime counting in under 20 minutes, it’s like 30 lines of code.

Floating Point Numbers

Notice that floating point numbers don’t form a field, because 0.1+0.2 is not equal to 0.2+0.1, i.e. it’s not commutative. Actually, Ganak2’s weighted model counting doesn’t currently support floating point numbers, because it doesn’t need to — we won literally every available track with infinite rational numbers: 0.3 is simply interpreted as 3/10. This sounds minor, but it also means that it’s actually trivial to check if Ganak runs correctly — it simply needs to be run with a different configuration and it should produce the same solution. Notice that this is not at all true for any other weighted model counter. Literally all of them, except for Ganak, will give different solutions if ran with different seeds. Which is hilarious, since there is obviously only one correct solution. But since 0.1+0.2 is not equal to 0.2+0.1 in floating point, they can’t do anything… rational.

This whole floating point saga is actually quite annoying, as it also means we can’t check Ganak against any other counter — at least not easily. All other counters give wrong answers, given that floating point is incorrect, and so we can only compare Ganak2 to other counters if we allow a certain deviation. Which is pretty hilarious, given that counters all claim to use “infinite precision”. In fact, the only thing infinite about their results is likely the error. Since (0.1+02)-(0.2+0.1) is not 0, it is actually possible to have theoretically infinite error in case of negative weights.

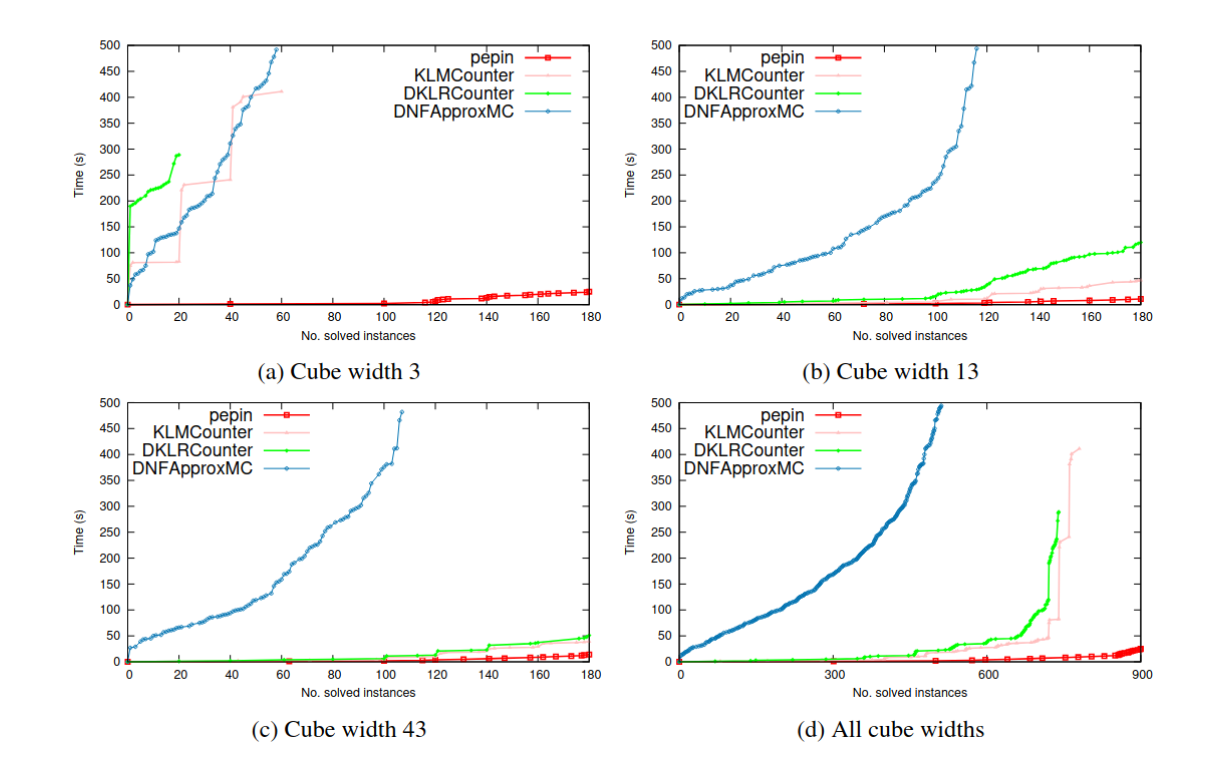

Approximate Counting and Ganak

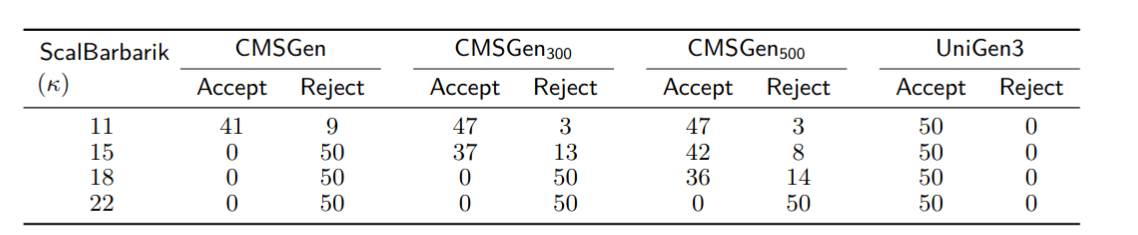

Ganak2 incorporates ApproxMC, and can seamlessly transition into counting via approximate model counting methods. To do this, simply pass the “–appmct T” flag, where T is the number of seconds after which Ganak will start counting in approximate mode. This can only be done for unweighted counting, as ApproxMC can only do unweighted counting. However, this seamless transition is very interesting to watch, and demonstrates that using a multi-modal counting framework, i.e. exact+approximate is a very viable strategy.

What happens under the hood is currently unpublished, but basically, we stop the exact counting, check how much we have counted so far, subtract that from the formula, and pass it to ApproxMC. We then get the result from ApproxMC and add the partial count that Ganak counted, and display the result to the user. So the final count is partially approximate, and partially exact, so we actually give better approximation (epsilon and delta) guarantees than what we promise.

Compiling Ganak

The new Ganak incorporates 8 libraries: GNU MP, CryptoMiniSat, Arjun, ApproxMC, SBVA, CadiCal, CadiBack, and BreakID. Furthermore, it and compiles in (optinally) BuDDy, Oracle (from Korhonen and Jarvisalo) and Flowcutter. These are all individually mentioned and printed to the console when running. However, this means that building Ganak is not trivial. Hence, with the very generous help of Noa Aarts, Ganak has a flake nix, so you can simply:

git clone https://github.com/meelgroup/ganak

cd ganak

nix-shellAnd it will reproducibly build Ganak and make it available in the path. All this needs is for one to have installed Nix, which is a single-liner and works incredibly reliably. Otherwise, the Ganak repository can be cloned together with its GitHub Actions, and then each push to the repository will build Ganak2 for 4 different architectures: Linux x86&ARM, Mac x86&ARM.

Submit your Version of Ganak to the 2025 Competition

Ganak2 is highly extensible/modifiable. I strongly encourage you to extend/change/improve it and submit your improvement to the Model Counting Competition of 2025. Deadline is in 3 weeks, it’s time to get your idea into Ganak and win. I promise I will have all my changes publicly available until then, and you can literally submit the same thing I will, if you like. I’d prefer if you put in some cool change, though.