I have been involved in computer security and security testing for a while, and I think it’s time to talk about some aspects of it that get ignored, mostly to our detriment. Let me get this out of the way first: security testing (or pentesting, if you like) and testing are very closely related.

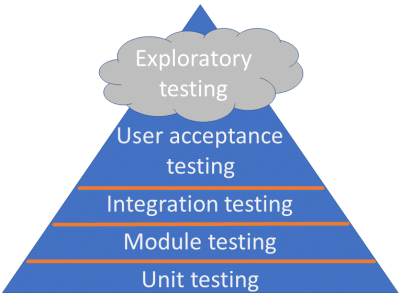

The Testing Pyramid

What’s really good about security testing being so close to testing is that you can apply the standard, well-know and widely used techniques from testing to the relatively new field of security testing. First of all, this chart:

This chart essentially means: you should have a lot of unit tests, fewer (but still many) module and integration tests, a good number of user acceptance tests, and then some exploratory testing on top. The rough definition of exploratory testing is “simultaneous learning, test design and test execution”. If you have ever seen or performed a pentest, you will know that that’s precisely what we do. We come in, at a pretty steep price per day, learn how the system works, come up with test cases on the spot and execute a stream of tests, dynamically adjusting our test strategies to the application at hand. At the end, we give the client a report of what we found and throw away our application-specific test strategies.

Once one realises that security testing/pentesting is exploratory testing, the question “what about the other test types?” comes naturally, because the testing literature will tell you in no uncertain terms that exploratory testing is the most expensive and least effective way to perform regular testing. It is great for finding the odd, one-off issues, and it has its place in the testing strategy one must employ. But it is incredibly ineffective for tests that can be performed at the unit, module, integration or user acceptance level, and there are many of those.

An Example

I used to work at a large company where I was put in charge of the security of one of their products. Of course, in the first week I did exploratory testing: to see what the application did, how its subcomponents fared in general, and to get a feel for what needed to be prioritised. Then I started walking down the pyramid. I fixed up and added a lot of user acceptance tests, e.g. using Codenomicon, a black-box testing tool that is perfect for tests at the user acceptance level. The team I worked in had a very extensive test system and test infrastructure, with well-defined strategies and many, many hooks into the system to make testing easier. These are incredibly important to have if you want to perform any kind of effective testing below the exploratory testing level. Once a bunch of security acceptance tests were set up and running as part of the continuous integration system, I went lower, to the module level, and added tests that are more effective but more difficult to set up, such as AFL-based feedback fuzzers. At this level, the bugs you find are a lot easier to triage, fix and validate, but the system takes longer to set up.
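AFL itself instruments compiled C/C++ code, so a faithful example would need its toolchain. As a rough illustration of what a module-level fuzz test looks like, the sketch below uses Python and a plain random mutation fuzzer. Note that it is not coverage-guided the way AFL is: it only mutates seed inputs and watches for unexpected exceptions. `parse_record` is a hypothetical module function with a deliberately planted bug, and treating `ValueError` as the module's clean way of rejecting bad input is an assumption of the sketch.

```python
import random


def parse_record(data: bytes) -> tuple:
    """Hypothetical module under test.

    Format: one length byte, then `length` bytes of "key=value;".
    Planted bug: the length byte is trusted, so a truncated record
    raises IndexError instead of being rejected cleanly.
    """
    if not data:
        raise ValueError("empty record")
    declared_len, body = data[0], data[1:]
    if declared_len == 0 or body[declared_len - 1] != ord(";"):
        raise ValueError("missing terminator")
    text = body[: declared_len - 1].decode("ascii", errors="replace")
    key, _, value = text.partition("=")
    return key, value


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Apply 1-4 random byte-level mutations: bit flip, insert or delete."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, 4)):
        op = rng.choice(("flip", "insert", "delete"))
        if op == "flip" and data:
            i = rng.randrange(len(data))
            data[i] ^= 1 << rng.randrange(8)
        elif op == "insert":
            data.insert(rng.randrange(len(data) + 1), rng.randrange(256))
        elif op == "delete" and data:
            del data[rng.randrange(len(data))]
    return bytes(data)


def fuzz(target, seeds, iterations=10_000, rng_seed=0):
    """Return the first mutated input that makes `target` crash, or None."""
    rng = random.Random(rng_seed)
    for _ in range(iterations):
        candidate = mutate(rng.choice(seeds), rng)
        try:
            target(candidate)
        except ValueError:
            pass  # clean rejection of malformed input: not a bug
        except Exception:
            return candidate  # anything else is a crash worth triaging
    return None


if __name__ == "__main__":
    crash = fuzz(parse_record, [b"\x08key=val;"])
    print("crashing input:", crash)
```

Because the fuzzer is seeded, a crashing input it reports can be replayed exactly, which is one reason bugs found at this level tend to be quicker to triage than those found by hand.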

Limitations of Pentesting

You would be surprised how often security consulting companies are engaged to test a system where testers (i.e. humans) manually fire off trivial inputs such as “<script>alert(1)</script>” into parameters, and find bugs. At this point, any sane tester would ask: why don’t we, the security consultants, automate this within the scope of the engagement? It would be a bit like an acceptance test: still a lot more expensive, but at least fast and automated, and the automation could be handed to the client as a by-product of the engagement, lowering long-term costs. The reality is that one cannot just fire off those inputs, for a number of reasons, mostly to do with poor or unavailable testing infrastructure and unavailable or non-existent test hooks. First of all, I cannot just spin up 20 virtual systems and fire these off in parallel. Typically there are many injection points and many inputs to fire off, and remember, these multiply. When I was integrated into the test team, I could just launch a bunch of VMs and perform tens of millions of tests in a matter of (wall-clock) hours. VMs are a lot cheaper than humans: testing infrastructure matters. Secondly, a lot of applications have controls (or poor code) in place that detect manipulation (or simply go haywire) and log out the user. Hence one must draw up strategies for evading these controls. These controls should be possible to turn off for testing, but whenever I get to see the code, I find that they cannot be: the option is just not there. Testing hooks matter.
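To make the “these multiply” point concrete, here is a minimal Python sketch of the kind of automation that could be handed over to a client. Everything in it is hypothetical: `FakeApp` stands in for the system under test, its `tamper_detection` flag plays the role of the test hook that is usually missing, checking whether a payload is reflected verbatim is a deliberately crude detection heuristic, and a thread pool stands in for a fleet of parallel VMs.

```python
import itertools
from concurrent.futures import ThreadPoolExecutor

# Hypothetical injection points and a tiny payload list. Real suites are
# much larger, and every payload is tried at every injection point.
INJECTION_POINTS = ["search", "username", "comment", "sort"]
PAYLOADS = [
    "<script>alert(1)</script>",
    "<img src=x onerror=alert(1)>",
    "\"><svg onload=alert(1)>",
]


class FakeApp:
    """Stand-in for the system under test (hypothetical)."""

    def __init__(self, tamper_detection: bool = True):
        # Mimics controls that log the user out on suspicious input.
        # The "test hook" is simply being able to pass False here.
        self.tamper_detection = tamper_detection

    def submit(self, field: str, value: str) -> str:
        if self.tamper_detection and "<" in value:
            return "logged out"
        if field == "comment":
            return f"<p>{value}</p>"  # planted bug: echoed back unescaped
        return "ok"


def check(app: FakeApp, field: str, payload: str):
    """Report (field, payload) if the raw payload is reflected verbatim."""
    response = app.submit(field, payload)
    return (field, payload) if payload in response else None


def run_suite(app: FakeApp, workers: int = 8) -> list:
    """Run every (injection point, payload) combination in parallel."""
    cases = list(itertools.product(INJECTION_POINTS, PAYLOADS))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda case: check(app, *case), cases)
        return [r for r in results if r is not None]


if __name__ == "__main__":
    print(run_suite(FakeApp(tamper_detection=True)))   # control blocks every case
    print(run_suite(FakeApp(tamper_detection=False)))  # hook off: bug surfaces
```

Even this toy suite runs 4 × 3 = 12 cases; with realistic lists of injection points and payloads the count grows multiplicatively, which is where parallel infrastructure and the ability to switch off tamper detection come in.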

Swimming Against the Tide

Of course, “<script>alert(1)</script>” and “' or 1=1;--” are not all there is to security testing. They are trivial inputs and don’t go deep at all. But do you really want to pay someone several hundred a day to send these? More complicated inputs can also be automated: Codenomicon and AFL will create some incredibly complex inputs if you hook them up correctly and give them a week or so of CPU time (not wall-clock time). Naturally, few if any automated tests will find business logic issues on their own, e.g. one user making a transaction as another user. However, a good number of these can be automated too, e.g. by using a hook that pins your session to one user, launching an automated tool, and then checking for transactions performed as other users. There are diminishing returns here, and some business logic issues and some deeper issues can probably only be revealed by a combination of threat modelling and exploratory security testing. But ignoring all unit/module/integration/user acceptance security tests will forever leave you feeling like you are swimming against the tide. It is not hard to find people responsible for information security in large organisations who are completely swamped, running from one disastrous pentest report (and maybe even incident) to the next.
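The session-pinning idea can be sketched as follows, again in Python and with everything hypothetical: `FakeBank`, its `pin_session` test hook, a loop standing in for the automated tool, and the simplifying assumption that each user owns exactly one account named after them. The interesting part is the check after the run: any transaction whose owner differs from the pinned session user points to a business logic flaw.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Transaction:
    owner: str   # account the money was taken from
    actor: str   # session user who issued the request
    amount: int


@dataclass
class FakeBank:
    """Hypothetical system under test with a planted authorisation bug."""

    ledger: List[Transaction] = field(default_factory=list)
    session_user: Optional[str] = None

    def pin_session(self, user: str) -> None:
        """Test hook: every following request is made as `user`."""
        self.session_user = user

    def transfer(self, from_account: str, amount: int) -> None:
        if self.session_user is None:
            raise RuntimeError("no session pinned")
        # Planted bug: from_account comes straight from the request and is
        # never checked against the logged-in session user.
        self.ledger.append(Transaction(from_account, self.session_user, amount))


def automated_tool(bank: FakeBank, accounts: List[str]) -> None:
    """Stand-in for a scanner replaying requests with varied parameters."""
    for account in accounts:
        bank.transfer(account, 10)


def cross_user_violations(bank: FakeBank) -> List[Transaction]:
    """Transactions on an account the session user does not own."""
    return [t for t in bank.ledger if t.owner != t.actor]


if __name__ == "__main__":
    bank = FakeBank()
    bank.pin_session("alice")
    automated_tool(bank, ["alice", "bob", "carol"])
    for t in cross_user_violations(bank):
        print(f"{t.actor} moved {t.amount} out of {t.owner}'s account")
```

The automated tool needs no knowledge of the business rules; it only varies parameters. The rule lives in the post-run check, which is what makes this kind of test cheap to repeat on every build.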

At this point, you’re most likely thinking: this all sounds good, but how will I get buy-in from upper and lower management and from the development and test teams to integrate security testing into standard processes? I can only talk from experience: you don’t sell security as being strictly about “evil hackers”. I got buy-in partly because ~80% of the security bugs found through the lower-level tests also had a non-security impact (e.g. see Guy Leaver’s OpenSSL bug). Further, the inputs that exercised security issues were generally different (and often more concise and repeatable), so issues that were known but hard to pinpoint could finally be nailed down to the exact line of code. Developers love fixing bugs that take less than an hour to go from bug report to committed fix. Find someone to help you draw up a strategy for adding such tests and to help your developers write more secure code, and soon you will no longer be swimming against the tide.

Hopes for More Interesting (and Valuable) Security Tests

If all the above sounds like I’m advocating against my own work, I’m not. I’m doing the exact opposite. I’m advocating for systems to have fewer trivial bugs, better test infrastructure and more test hooks, so that when I come in and perform an exploratory security test (pentest, if you like), I have the most leverage possible to help. I will launch “<script>alert(1)</script>” if that’s what’s required, but I’d much rather help out with the more complicated stuff that needs specialised knowledge, from business logic through security test strategies to threat modelling. That is where the value lies.