In OpenCL, the platform for using the graphics card as a highly parallel machine, reading from and writing to the card's memory is very expensive. For this reason, the OpenCL standard offers a set of highly flexible ways of transferring data to and from the OpenCL device. These mechanisms take time to get used to, but they seem to be necessary to get the most out of the graphics card.

The Problem

If data needs to be processed, a standard way of pushing it through the compute device is to use two instances of an OpenCL kernel: while one is executing, the host reads back the finished data of the other and uploads its next piece of data. Then the kernels switch places: kernel 2 processes while data is read and written for kernel 1. This requires the programmer to index every data piece (since there are now two copies of each buffer) and to get the last iteration right. The latter is tricky, because once there is no more data to process, kernel 2 may still be executing even though kernel 1 can already be stopped.
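To make the bookkeeping concrete, here is a minimal sketch that only prints the order of operations for such a two-slot scheme. It makes no OpenCL calls; the function name `pingpong_schedule` and the "slot" terminology are assumptions made for this illustration.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical trace of a two-slot ("ping-pong") schedule for n_chunks chunks.
// Each entry lists the operations that overlap in one step.
std::vector<std::string> pingpong_schedule(int n_chunks) {
    assert(n_chunks >= 0);
    std::vector<std::string> steps;
    if (n_chunks == 0) return steps;

    // Prologue: nothing is executing yet, so chunk 0 is uploaded on its own.
    steps.push_back("upload chunk 0 -> slot 0");

    for (int i = 0; i < n_chunks; ++i) {
        const int busy = i % 2;     // slot whose kernel executes chunk i
        const int idle = 1 - busy;  // slot that is free for transfers
        std::string s = "execute chunk " + std::to_string(i) +
                        " in slot " + std::to_string(busy);
        if (i >= 1) {
            // The previous chunk finished in the other slot: read it back.
            s += " | read back chunk " + std::to_string(i - 1) +
                 " from slot " + std::to_string(idle);
        }
        if (i + 1 < n_chunks) {
            // Only upload if there is still data left.
            s += " | upload chunk " + std::to_string(i + 1) +
                 " -> slot " + std::to_string(idle);
        }
        steps.push_back(s);
    }

    // Epilogue: the last chunk's result is still on the device.
    steps.push_back("read back chunk " + std::to_string(n_chunks - 1) +
                    " from slot " + std::to_string((n_chunks - 1) % 2));
    return steps;
}
```

Even in this toy form, the first step, the last step, and the two `if` conditions inside the loop are all special cases that have to be handled correctly.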

These difficulties make the system tricky, though not impossible, to get right. In this blog post I would like to share a nifty trick I have found that eliminates this complexity and significantly cleans up the code, while keeping the same speed.

The Solution

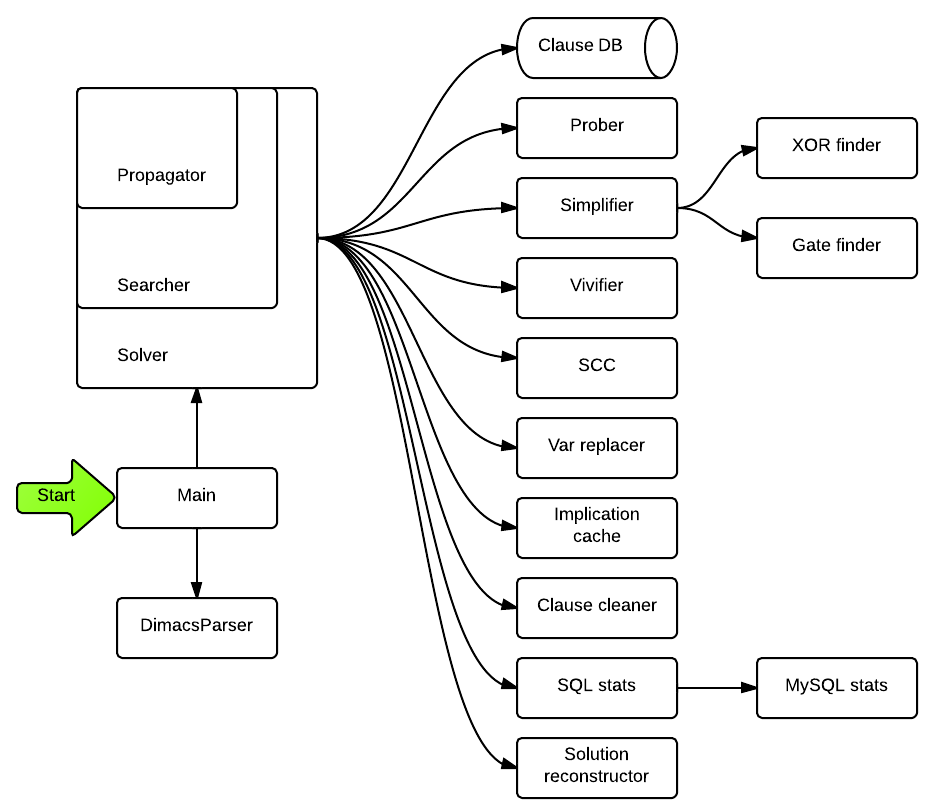

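Since OpenCL 1.1, the OpenCL API calls are thread-safe (with the exception of clSetKernelArg), so the host program can drive the device from several threads. While managing threads is one of the most dreaded parts of programming, OpenMP makes things extremely simple. The architecture we will need is the following: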

- A single-kernel system that churns through data. Let us call this Worker.

- A simple class that keeps track of which data pieces have already been handed out. Let us call this Dealer.

There will be two Workers and one Dealer. When a Worker requests data from the Dealer, it does so inside an OpenMP critical section (#pragma omp critical), so only one Worker talks to the Dealer at a time. The Dealer is simply initialised with the data to read through; upon request it deals a data piece out to the Worker and marks that piece as ‘done’. If there is no more data to work on, the Worker exits. The only tricky part is the startup, which should look like:

    // Don't allow a dynamic number of threads
    omp_set_dynamic(0);
    // Set the number of threads we need
    omp_set_num_threads(2);
    // Initialise Dealer (shared by all threads)
    Dealer dealer(my_options);

    #pragma omp parallel
    {
        // Available for logging or per-thread setup
        int thread = omp_get_thread_num();
        int num_threads = omp_get_num_threads();
        // Each thread constructs and runs its own Worker
        Worker worker(&dealer, my_options);
        worker.compute();
    }
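The startup code relies on Dealer and Worker classes that are not shown here. As a rough sketch of what they might look like, the version below hands out chunk indices instead of real data and replaces the OpenCL kernel with a placeholder computation. All member names, the `run_workers` helper, and the `dealer_access` critical-section name are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical Dealer: hands out chunk indices and marks them as done.
class Dealer {
public:
    explicit Dealer(std::size_t n_chunks) : done_(n_chunks, false), next_(0) {}

    // Returns the next chunk index, or -1 if nothing is left.
    // Not thread-safe on its own: callers wrap it in a critical section.
    long next_chunk() {
        if (next_ >= done_.size()) return -1;
        done_[next_] = true;
        return static_cast<long>(next_++);
    }

private:
    std::vector<bool> done_;
    std::size_t next_;
};

// Hypothetical Worker: keeps requesting chunks until the Dealer runs out.
class Worker {
public:
    Worker(Dealer* dealer, std::vector<int>* results)
        : dealer_(dealer), results_(results) {
        assert(dealer != nullptr && results != nullptr);
    }

    void compute() {
        for (;;) {
            long chunk = -1;
            // Only one thread at a time may talk to the Dealer.
            #pragma omp critical(dealer_access)
            {
                chunk = dealer_->next_chunk();
            }
            if (chunk < 0) break;  // no more data: this Worker exits
            // Placeholder for upload, kernel execution and read-back.
            (*results_)[static_cast<std::size_t>(chunk)] =
                static_cast<int>(chunk * chunk);
        }
    }

private:
    Dealer* dealer_;
    std::vector<int>* results_;
};

// Runs n_threads Workers against one shared Dealer.
std::vector<int> run_workers(std::size_t n_chunks, int n_threads) {
    Dealer dealer(n_chunks);
    std::vector<int> results(n_chunks, 0);
    #pragma omp parallel num_threads(n_threads)
    {
        Worker worker(&dealer, &results);
        worker.compute();
    }
    return results;
}
```

Each chunk index is dealt out exactly once, so the Workers write to distinct elements of `results` and need no further locking. There is also no special last iteration: a Worker simply stops when the Dealer returns -1. Compiled without OpenMP support, the pragmas are ignored and the same code runs on a single thread.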

It’s really simple, and works on all platforms where OpenMP is supported, which is pretty much everything, including Windows, Linux, embedded devices, etc. The really nice part about this architecture though is that it allows for way more than just getting around the problems with multiple kernels in the same source code.

The Advantages

First of all, this architecture allows for the Dealer to do much more than just deal out data. You can set the number of threads to 3, set the workers to do their stuff, and wake up the Dealer every 5 seconds to dump the data, for instance. This only needs a “while(!finished) { wait(5sec); dump_my_data();}” loop in Dealer. Since the other threads can simply continue working while Dealer is writing to disk, this may be quite an important advantage in case the data you are generating is large. Similarly, Dealer could pre-load the data from disk that is to be dealt out to Workers.
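A minimal sketch of this three-thread arrangement follows, using OpenMP sections so that two sections work and a third one dumps periodically. The struct `DumpingDealer`, the functions `work`, `dump_periodically` and `run_with_dumps`, and the short dump period are all assumptions for illustration. The "dump" here only records a snapshot instead of writing to disk. The sketch also assumes that three threads are actually granted, or that the sections run in textual order otherwise.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical shared state: results plus progress counters.
struct DumpingDealer {
    std::vector<int> results;    // one slot per chunk
    std::vector<int> last_dump;  // stand-in for what was written to disk
    std::size_t next = 0;        // next chunk to hand out
    std::size_t completed = 0;   // chunks whose results are stored
    int dumps = 0;               // number of dumps performed

    explicit DumpingDealer(std::size_t n) : results(n, 0) {}
};

// Worker loop: fetch a chunk, "process" it, store the result.
void work(DumpingDealer& d) {
    for (;;) {
        long chunk = -1;
        #pragma omp critical(dealer_access)
        {
            if (d.next < d.results.size()) chunk = static_cast<long>(d.next++);
        }
        if (chunk < 0) break;
        const int value = static_cast<int>(chunk) + 1;  // placeholder work
        #pragma omp critical(dealer_access)
        {
            d.results[static_cast<std::size_t>(chunk)] = value;
            ++d.completed;
        }
    }
}

// Dealer loop: wake up periodically, snapshot under the lock, dump outside it.
void dump_periodically(DumpingDealer& d, std::chrono::milliseconds period) {
    assert(period.count() > 0);
    bool finished = false;
    while (!finished) {
        std::vector<int> snapshot;
        #pragma omp critical(dealer_access)
        {
            finished = (d.completed == d.results.size());
            snapshot = d.results;
        }
        // Slow I/O would happen here, while the workers keep running.
        d.last_dump = snapshot;
        ++d.dumps;
        if (!finished) std::this_thread::sleep_for(period);
    }
}

DumpingDealer run_with_dumps(std::size_t n_chunks) {
    DumpingDealer d(n_chunks);
    #pragma omp parallel sections num_threads(3)
    {
        #pragma omp section
        work(d);
        #pragma omp section
        work(d);
        #pragma omp section
        dump_periodically(d, std::chrono::milliseconds(10));
    }
    return d;
}
```

The critical section only covers the copy of the results, so the workers are blocked for the duration of a memory copy rather than for the whole disk write. The final dump is taken in the same critical section that observes completion, so it contains every result.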

Another very nice advantage of the above is that there can be any number of Workers, which sounds crazy until you get your hands on a multi-GPU computer. To take advantage of all GPUs in the system, the Workers simply need to be parametrised with the number of the device they should use. For example, if we have two OpenCL devices in a machine, we need to launch 4 threads: two with device number 0 and two with device number 1. If the data chunks are small relative to the overall data, e.g. a chunk needs approx. 1 minute to process but the overall data takes more than an hour, the Dealer will distribute the load pretty evenly among the GPUs. The GPUs can even be of different speeds without affecting the overall finish time much, because a faster GPU simply requests chunks more often.
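One simple way to assign device numbers is by integer division of the thread number. The helper below is a sketch of that mapping; the name `device_per_thread` and the idea of two Workers per device are taken from the example above, while the function itself is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns, for each thread number, the device number it should use,
// e.g. 2 devices with 2 workers each -> {0, 0, 1, 1}.
std::vector<int> device_per_thread(int n_devices, int workers_per_device) {
    assert(n_devices > 0 && workers_per_device > 0);
    std::vector<int> device(
        static_cast<std::size_t>(n_devices) * workers_per_device);
    for (std::size_t t = 0; t < device.size(); ++t) {
        device[t] = static_cast<int>(t) / workers_per_device;
    }
    return device;
}
```

Inside the parallel region, each thread could then look up `device_per_thread(2, 2)[omp_get_thread_num()]` and pass the result to its Worker, assuming the Worker constructor accepts a device number.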

Conclusions

Although the proposed architecture uses multi-threading, something that most programmers fear (sometimes rightly so), it leads to significantly cleaner and more flexible code that can do more with less. Using this architecture, the code should be easier to write, maintain, and extend, which is something all good programmers strive for.

I have been using the AMD’s
