Version 2.6.0 of the CryptoMiniSat SAT solver has been released. The new release incorporates a number of important additions and discoveries. Additions include a better memory manager and better watchlists, while the discoveries include an interesting use of asymmetric branching and an on-the-fly self-subsuming resolution algorithm.

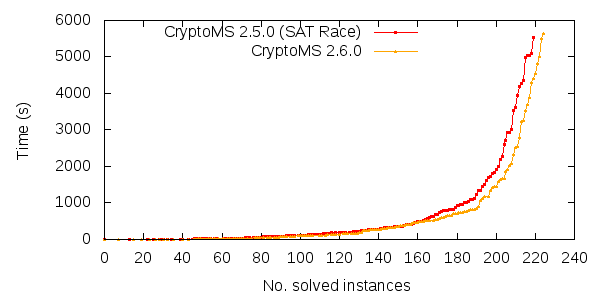

The new solver can now solve 221 problems from the SAT Competition of 2009 within the same timing&CPU constraints, while the 2.5.0 (i.e. SAT Race) version could only solve 217. Here is a comparison plot:

The cut-off for the competition for these machines was approx at 5000 sec. As can be seen from the graph (which goes until 6000 sec), the new solver does even better in the longer run: the additions seem to improve the long-term behaviour.

As for the future, I think there is still a lot of things to do. For example, the solver still doesn’t have blocked clause elimination, which could help, and it is still missing some ideas that others have published. Notably, it doesn’t do on-the-fly subsumption at every step of the conflict generation process, only at the very last step. In case you are interested to add any of these in a transparent manner, feel free to hack away at the git repository.

Edited to Add (3/09/2010): The performance of CryptoMiniSat on the SAT Race 2010 problems has also changed with the new version. The new CryptoMiniSat can now solve 75 problems (instead of 74) within the time limit. More importantly, the average time to solve these 75 instances has decreased considerably, to around 111 seconds per instance (from 138 s/inst), which is very close to the results of lingeling, an extremely fast and very advanced solver. There is still a lot to be learnt from lingeling, however: its memory footprint is far smaller, and its preprocessing techniques in some areas are much better than that of CryptoMiniSat.