Lately, I have been putting a lot of effort into collecting data about solver behaviour and dumping it on-the-fly to a MySQL database. Some of this data is then presented by a PHP&javascript interface, such as this.The goal is to better understand how solving works, and thus to possibly come up with better strategies for solving. The requirements of the data gathered are: speed to calculate, size to store and usefulness.

Gathered data

There are some obvious statistics that one would like to collect, such as number of conflicts, propagations and conflicts per second, etc — these are all gathered by almost every SAT solver out there. However, it would be interesting to have more. Here is what CryptoMiniSat now gathers and dumps using highly efficient prepared bulk insert statements, using at most 5% of time.

For each variable, dumped once in a while:

- no. times propagated false, and true (separately)

- no. times decided false and true (sep.)

- no. times flipped polarity

- avg & variance of decision level

- avg & variance of propagation level

For each clause larger than 3-long, dumped once in a while:

- activity

- conflict at which it was introduced (if learnt)

- number of propagations made

- number of conflicts made

- number of times any of its literal was looked at during BCP

- number of times it was looked at (dereferenced) during BCP

- number of times used to resolve with during learnt clause generation

- number of resolutions needed to during its generation (if learnt clause)

For earch restart:

- no. of reducible&irreducible (i.e. learnt&non-learnt) binary clauses

- no. of red&irred tertiary clauses (separately)

- no. of red&irred long clauses (sep)

- avg, variance, min and max of learnt clause glues

- avg, var, min, max of learnt clause sizes

- avg, var, min, max of number of resolutions for 1st UIP

- avg,var,min,max of branch depths

- avg,var,min,max of backjump length –in the number of literals unassigned

- avg,var,min,max of backjump lenght — in the number of levels backjumped

- avg, var, min, max times a conflict followed a conflict without decisions being made

- avg,var,min,max of agility

- no. of propagations by red&irred binary clauses

- no. of props by red&irred tertiary clauses

- no. of props by red&irred long clauses

- no. of conflicts by red&irred binary clauses (separately)

- no. of conflicts by red&irred tertiary clauses (sep)

- no. of conflicts by red&irred long clauses (sep)

- no. of learnt unit, binary, tertiary, and long clauses (sep)

- avg,var, min,max of watchlist size traversed during BCP

- time spent

- no. of decisions

- no. of propagations

- no. of variables flipped

- no. of variables set positive&negative (sep)

- no. of variables currently unset

- no. of variables currently replaced

- no. of variables currently eliminated

- no. of variables currently set

For each learnt clause database cleaning:

- for reducible clauses removed: all the data in the “clauses larger than 3-long” data above, summed up

- for reducible clauses not removed: all the data in the “clauses larger than 3-long” data above, summed up

- no. of clauses removed

- no. of clauses not removed

- for all irred clauses (these are not touched): all the data in the “clauses larger than 3-long” data above, summed up

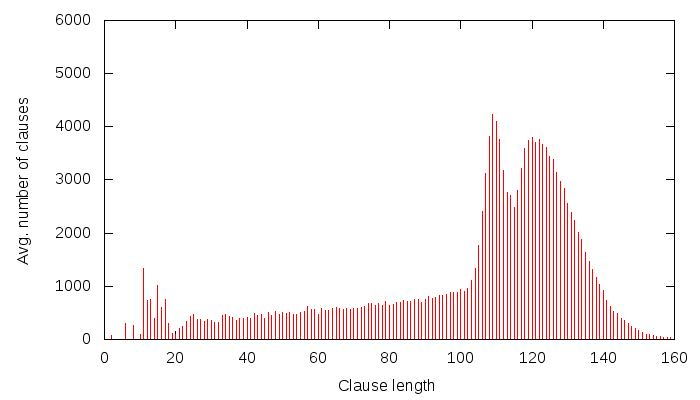

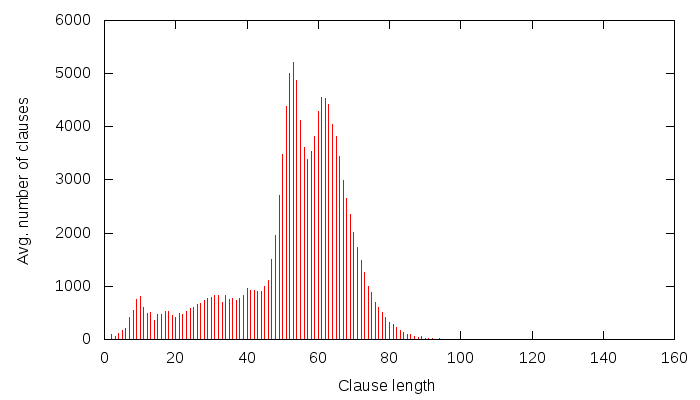

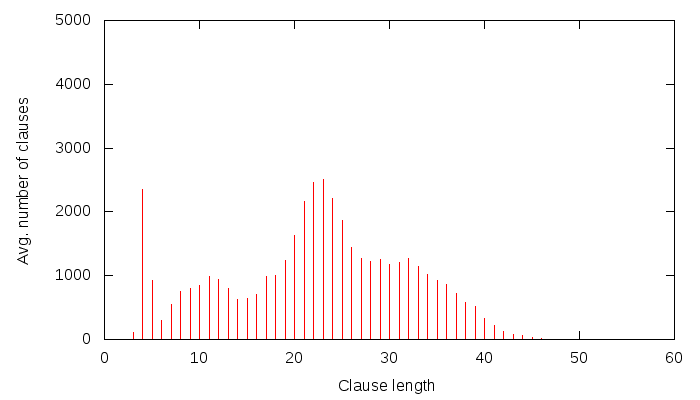

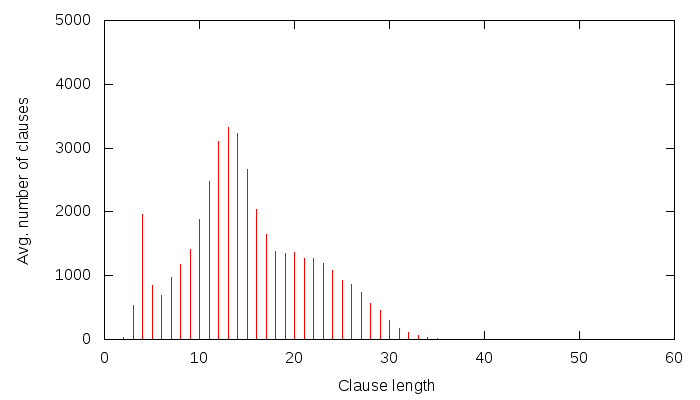

For every N conflicts:

- clause size distribution

- clause glue distribution

- clause size and glue scatter data

This is all, and is not all mine. Suggestions were made by many, some as much as a year ago: George Katsirelos, Luca Melette, Vegard Nossum, Valentin-Mayer Eichberger, Ben Schlabs, Said Jabbour and Niklas Sörensson. Naturally, none of these people necessarily approve of how I gather data or what I do with the data gathered, but they helped, so listing them is only fair.

What’s not yet or cannot be gathered

Two suggestions are still on the TODO list:

- counting the number of conflicts done while a learnt clause was “locked”, i.e. has propagated in the current search tree. This stat of a learnt clause could tell us if the clause seemed essential to the search or not. If a clause propagated once, at the bottom of the search tree, and then the variable propagated was quickly unset, it’s not the same as if the same clause propagated at the top of the search tree, and then the variable it set was essentially never unset.

- for each variable, counting the number of conflicts done while the variable was set. This is interesting, because if a variable was propagated only once, at top-level, it will seem like it was never set (set only once), yet it may have had a huge influence on the search tree and consequently on the learnt clauses.

Both of these require a change in the algorithm used to backjump and although I am a bit worried about the performance, I will add these soon.

Unfortunately, the data about variables can’t really be dumped so often, because it’s huge for large problems with millions of variables. So I guess I will only dump that for the most active N variables, for some sane definition of “active”. Similarly, the data about individual clauses is not dumped, only in a summed-up way during database cleaning.

Suggestions?

In case you have any ideas what else could be interesting to dump, please put it as a comment. The idea is to dump data that is cheap to compute and cheap to dump yet would be interesting for some reason or another. I am prepared to add stuff to datastructures, as you have probably guessed from the above. Yes, it makes the clause database less space-efficient, and so increases cache-miss. But on large problems, it’s going to be a cache-miss most of the time anyway, and a cache-fetch brings in 128B of data, which should be plenty for both the stats and the clause. Similarly with variables. So, don’t refrain from suggesting some stuff that takes space. Also, weird stuff is interesting. The most weird stat on the list above is definitely the “conflict after a conflict without decisions” (suggested by Said Jabbour) which I would have guessed to be zero, or near-zero, yet is pretty high, in the 30+% range.

Suggestions are welcome!

In case you’ve missed it, the

In case you’ve missed it, the