After many months of work, CryptoMiniSat 5.8.0 has been released. In this post I’ll go through the most important changes, and how they helped the solver to be faster and win a few awards, among them 1st place at the SAT incremental track, 3rd place SAT Main track, and 2nd&3d place in the SMT BitVector tracks together with the STP and MinkeyRink solvers.

Gauss-Jordan Elimination

First and foremost, Gauss-Jordan elimination at all levels of the search is now enabled by default. This is thanks to the work detailed in the CAV 2020 paper (video here). The gist of the paper is that we take advantage of the bit-packed matrix and some clever bit field filters to quickly check whether an XOR constraint is propagating, conflicting, or neither. This, and a variety of other improvements lead to about 3-10x speedup for the Gauss-Jordan elimination procedure.

With this speedup, the overhead is quite small, and we enable G-J elimination at all times now. However, there are still limits on the size of the matrix, the number of matrices, and we disable it if it doesn’t seem to improve performance.

As a bit of reflection: our original paper with Nohl and Castelluccia on CryptoMiniSat, featuring Gauss-Jordan elimination at all levels of the search tree was published at SAT 2009. It took about 11 years of work, and in particular the work of Han and Jiang to get to this point, but we finally arrived. The difference is day and night.

Target Phases

This one is really cool, and it’s in CaDiCaL (direct code link here) by Armin Biere, description here (on page 8). If you look at the SAT Race of 2019, you will see that CaDiCaL solved a lot more satisfiable problems than any other solver. If you dig deep enough, you’ll see it’s because of target phases.

Basically, target phases are a variation of phase saving, but instead of saving the phase all the time when backtracking, it only saves it when backtracking from a depth that’s longer than anything seen before. Furthermore, it is doing more than just this: sometimes, it picks only TRUE, and sometimes it picks only FALSE phase. To spice it up, you can keep “local deepest” and “global deepest” if you like, and even pick inverted phases.

It’s pretty self-explanatory if you read this code (basically, just switching between normal, target, inverted, fixed FALSE, fixed TRUE phases) and it helps tremendously. If you look at the graphs of the SAT 2020 competition results (side no. 19 here) you will see a bunch of solvers being way ahead of the competition. That’s target phases right there.

CCAnr Local Search Solver

CryptoMiniSat gained a new local search solver, CCAnr (paper here) and it’s now the default. This is a local search solver by Shaowei Cai who very kindly let me add his solver to CryptoMiniSat and allowed me to add him as an author to the version of CryptoMiniSat that participated in the SAT competition. It’s a local search solver, so it can only solve satisfiable instances, and does so by always working on a full solution candidate that it tries to “massage” into a full solution.

Within CryptoMiniSat, CCAnr takes the starting candidate solution from the phases inside the CDCL solver, and tries to extend it to fit all the clauses. If it finds a satisfying assignment, this is emitted as a result. If it doesn’t, the best candidate solution (the one that satisfies the most clauses) is saved into the CDCL phase and is later used in the CDCL solver. Furthermore, some statistics during the local search phase are saved and then injected into the variable branching heuristics of the CDCL solver, see code here.

Hybrid Variable Branching

Variable branching in CryptoMiniSat has always been a mix of VSIDS (Variable State Independent Decaying Sum, paper here) and Maple (multi-arm bandit based, paper here) heuristics. However, both Maple and VSIDS have a bunch of internal parameters that work best for one, or for another type of SAT problem.

To go around the issue of trying to find a single optimal value for all, CryptoMiniSat now uses a combination of different configurations that is parsed from the command line, such as: “maple1 + maple2 + vsids2 + maple1 + maple2 + vsids1” that allows different configurations for both Maple and VSIDS (v1 and v2 for both) to be configured and used, right from the command line. This configuration system allows for a wider variety of problems to be efficiently solved.

Final Remarks

CryptoMiniSat is now used in many systems. It is the default SAT solver in:

- QBF solver Caqe, which regularly wins QBF competitions

- SMT solvers STP and MinkeyRink , SMT competition results here and here, regularly placing 2nd and 3rd in the QF_BV track

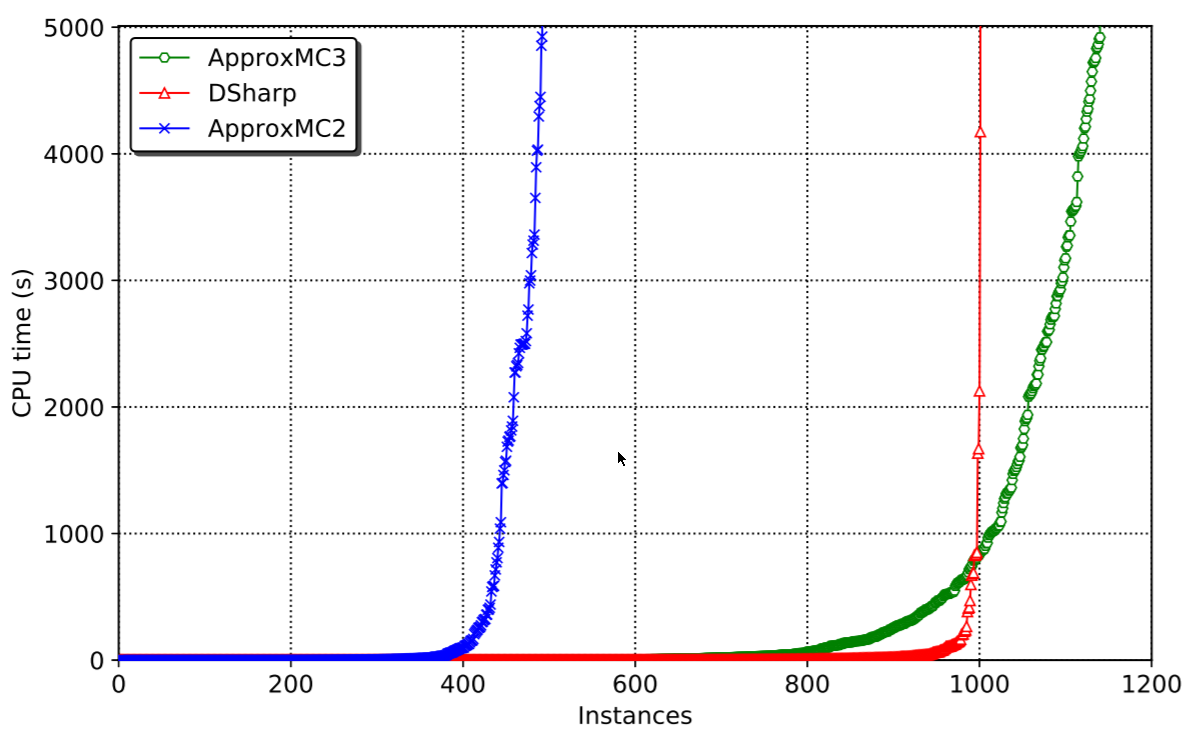

- Model counting system ApproxMC, which seems to have won 2 of the 3 Model Counting Competition 2020 awards

- Uniform sampling system Unigen

- ANF solving system Bosphorus

I think the above, especially given their track record of achieving high performance in their respective fields, show that CryptoMiniSat is indeed a well-performing and reliable workhorse. This is thanks to many people, including, but not limited to, Kuldeep Meel, Kian Ming A. Chai, Trevor Hansen, Arijit Shaw, Dan Liew, Andrew V. Jones, Daniel Fremont, Martin Hořeňovský, and others who have all contributed pull requests and valuable feedback. Thanks!

As always, let me know if you have any feedback regarding the solver. You can create a GitHub issue here, and pull request here. I am always interested in new use-cases and I am happy to help integrate it into new systems.