Marijn Heule kindly sent me an email on the 10th of April about DRUP, the new system used this year in the SAT Competition’13 for the UNSAT track. He kindly encouraged me to implement the DRUP system. He personally implemented it into Minisat which was a very helpful lead for me. In this post I will talk about my experiences in implementing DRUP into CryptoMiniSat within a span of 3 days.

Implementation complexity

It took only 3 days, about 1400 lines of code to implement DRUP:

git diff f27c74bbd c0b6ccc10 --shortstat

25 files changed, 1372 insertions(+), 307 deletions(-)

It turns out that the biggest problem is that whenever I shorten a clause, I first have to add the shortened version, and then delete the old one. Since I always do in-place literal deletion, this means I have to save the old clause into a temporary place, add the new one and finally delete the old one that has been saved. I will eventually write a C++ wrapper that does this for me, but currently, it’s a lot of

vector origCl(cl.size());

std::copy(cl.begin(), cl.end(), origCl.begin());

[blah...]

drup << cl << " 0" << endl;

drup << "d " << origCl << " 0" << endl;

So, it's a bit messy code. Other than this, the implementation went very smoothly. The biggest pain was not to forget to add to the DRUP output all changed clauses. Since I have implicit binary and tertiary clauses and I manipulate them in-place, they are changed in quite complicated code paths.

If you don't have such complicated code paths, you should be able to implement DRUP within a day or less. I encourage you to do so, it's quite fun!

Remaining uncertainties

I am a bit confused about whether some of the optimisations in CryptoMiniSat work with DRUP. I have been fuzzing the DRUP implementation for about ~1000 CPU hours, but not with all optimisations turned on. Some are a bit shaky. In particular, XOR and stamping&caching come to mind.

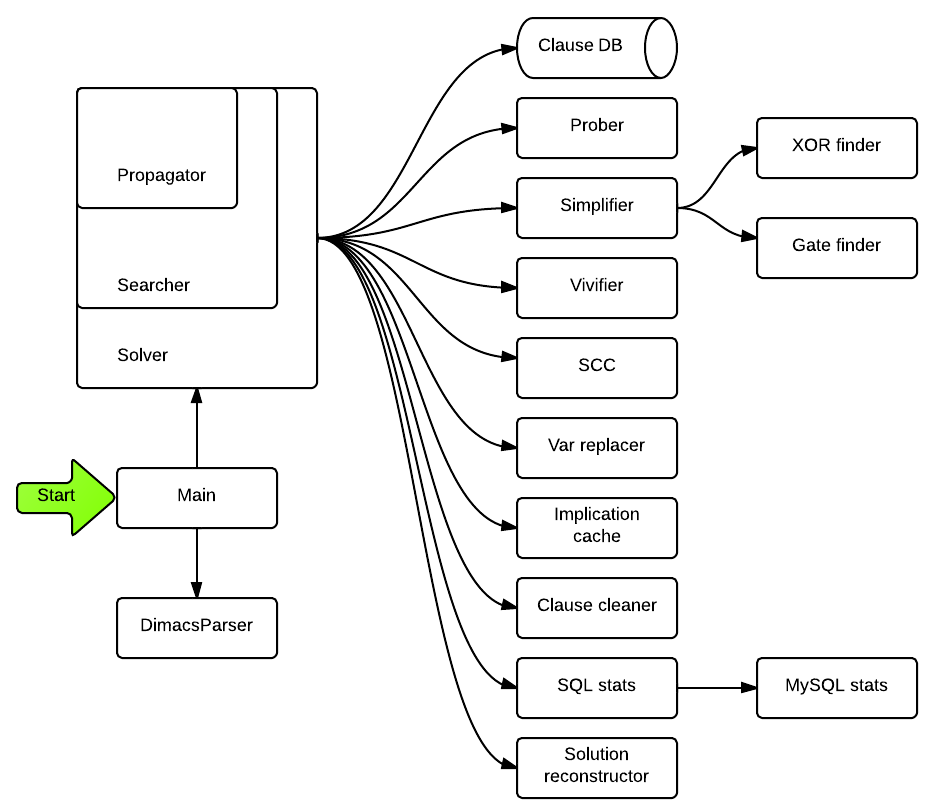

I cannot turn DRUP on for the top-level XOR manipulation because otherwise I would need to tell DRUP every Gaussian elimination step. Not funny, and not fast. Well, XOR is not such a big thing, and it is no longer natively implemented in CryptoMiniSat, so not a big deal, really.

The other, more troubling one is stamping and implied literal caching. Luckily I have on-the-fly hyper-binary resolution (this is needed for DRUP with Stalmarck if you think about it), so the binary clauses stored by caching and stamping are there... but they may get deleted by variable elimination, blocked clause elimination and... well, maybe nothing else. Hopefully not. Anyway, I never block binary clauses (does clause blocking ever help? I am confused) and I can of course not delete binary eliminated clauses from DRUP. However... that may make the verification very slow. So, I am at crossroads here. I think I will submit a version with stamping&caching and one without.

In the end, every optimisation can be turned on except for XOR. I find that exceptionally good given the number of tweaks/hacks used by CryptoMiniSat.

Long-term advatages of having DRUP

I think DRUP allows for a lot of possibilities. Naturally I first want to draw resolution graphs. There are plenty of libraries for 3D drawing, and I have already ordered the LEAP controller (a 3D controller), which will come handy to play with the resolution graphs (zoom&out, rotate, etc.).

From there, I want to get stats out of the graph, and I want to present it next to/with the stats that I already generate. For example, how many of the deleted clauses get re-learnt later? How many clauses get used in the resolution graph with the empty node? How often when cleaning with glues? How often when cleaning with activities? For which types of instances?

Linking this with real-world instances by coloring the graph points according to e.g. filter functions in stream ciphers is not very hard and should be quite a lot of fun.

Acknowledgements

I think Marijn Heule deserves a lot of thanks for the work he has put into DRUP (webpage, example, DIFF for MiniSat) and all the help he has given me. I had some initial doubts about whether it's possible to implement at all and I had some minor problems with the checker --- he always replied kindly and promptly. Thanks!